The Magic of Embeddings

Original: Stack: The Magic of Embeddings (convex.dev)

How similar are the strings “I care about strong ACID guarantees” and “I like transactional databases”? While there are a number of ways we could compare these strings (syntactically or grammatically, for instance), one powerful thing AI models give us is the ability to compare them semantically, using something called embeddings. Given a model, such as OpenAI’s text-embedding-ada-002, I can tell you that the aforementioned two strings have a similarity of 0.784, and are more similar than “I care about strong ACID guarantees” and “I like MongoDB” 😛. With embeddings, we can do a whole suite of powerful things:

- Search (where results are ranked by relevance to a query string)

- Clustering (where text strings are grouped by similarity)

- Recommendations (where items with related text strings are recommended)

- Anomaly detection (where outliers with little relatedness are identified)

- Diversity measurement (where similarity distributions are analyzed)

- Classification (where text strings are classified by their most similar label)

This article will look at working with raw OpenAI embeddings.


What is an embedding?

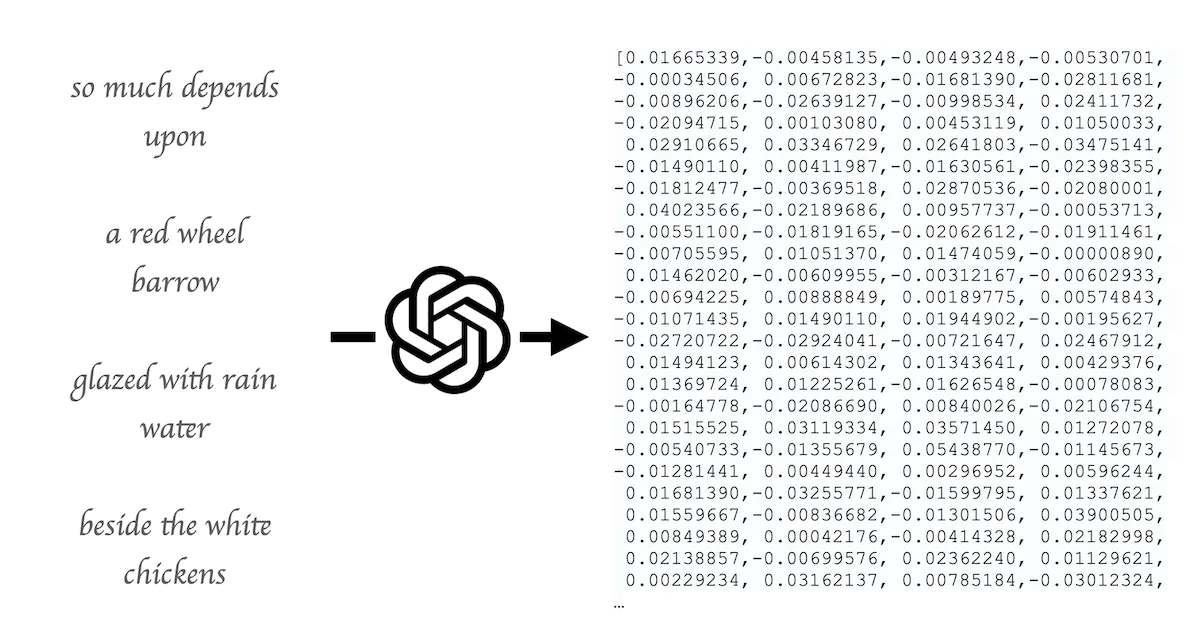

An embedding is ultimately a list of numbers that describe a piece of text, for a given model. In the case of OpenAI’s model, it’s always a 1,536-element-long array of numbers. Furthermore, for OpenAI, the numbers are all between -1 and 1, and if you treat the array as a vector in 1,536-dimensional space, it has a magnitude of 1 (i.e. it’s “normalized to length 1” in linear algebra lingo).

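
To make "normalized to length 1" concrete, here is a minimal sketch of computing a vector's magnitude and scaling it to unit length. The helper names `magnitude` and `normalize` are my own for illustration, and the two-element vector is a toy stand-in for a real 1,536-element embedding.

```typescript
// Euclidean length (magnitude) of a vector: square root of the sum of squares.
function magnitude(vector: number[]): number {
  let sumOfSquares = 0;
  for (const value of vector) {
    sumOfSquares += value * value;
  }
  return Math.sqrt(sumOfSquares);
}

// Scale a vector so that its magnitude becomes 1.
function normalize(vector: number[]): number[] {
  const length = magnitude(vector);
  if (length === 0) {
    throw new Error("Cannot normalize a zero-length vector");
  }
  return vector.map((value) => value / length);
}

// A toy 2-element "embedding"; real ada-002 vectors have 1,536 elements.
const toy = normalize([3, 4]);
console.log(toy, magnitude(toy)); // magnitude is 1, up to floating-point rounding
```

Because every element of a unit-length vector contributes at most 1 to the sum of squares, each element necessarily falls between -1 and 1.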

On a conceptual level, you can think of each number in the array as capturing some aspect of the text. Two arrays are considered similar to the degree that they have similar values in each element in the array. You don’t have to know what any of the individual values correspond to—that’s both the beauty and the mystery of embeddings—you just need to compare the resulting arrays. We’ll look at how to compute this similarity below.


Depending on what model you use, you can get wildly different arrays, so it only makes sense to compare arrays that come from the same model. It also means that different models may disagree about what is similar. You could imagine one model being more sensitive to whether the string rhymes. You could fine-tune a model for your specific use case, but I’d recommend starting with a general-purpose one, for much the same reasons you would generally pick ChatGPT over a fine-tuned text generation model.

It’s beyond the scope of this post, but it’s worth mentioning that although we’re only looking at text embeddings here, there are also models that turn images and audio into embeddings, with similar implications.

How do I get an embedding?

There are a few models to turn text into an embedding. To use a hosted model behind an API, I’d recommend OpenAI, and that’s what we’ll be using in this article. For open-source options, you can check out all-MiniLM-L6-v2 or all-mpnet-base-v2.


Assuming you have an API key in your environment variables, you can get an embedding via a simple fetch:


    export async function fetchEmbedding(text: string) {
      const result = await fetch("https://api.openai.com/v1/embeddings", {
        method: "POST",
        headers: {
          "Content-Type": "application/json",
          Authorization: "Bearer " + process.env.OPENAI_API_KEY,
        },
        body: JSON.stringify({
          model: "text-embedding-ada-002",
          input: [text],
        }),
      });
      const jsonresults = await result.json();
      return jsonresults.data[0].embedding;
    }
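
The function above assumes the request succeeds. As a hedged sketch (not part of the original post), a variant could check the HTTP status and the response shape before returning. The name `fetchEmbeddingChecked`, the `MinimalResponse` and `FetchLike` types, and the choice to pass the API key and fetch implementation as parameters are all my own assumptions, made so the logic can be exercised without a network call.

```typescript
// Minimal shapes for the parts of fetch this sketch relies on, so that a
// fake implementation can be passed in for testing.
type MinimalResponse = {
  ok: boolean;
  status: number;
  json(): Promise<unknown>;
};
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string }
) => Promise<MinimalResponse>;

// Hypothetical variant of fetchEmbedding that fails loudly on bad responses.
async function fetchEmbeddingChecked(
  text: string,
  apiKey: string,
  fetchImpl: FetchLike
): Promise<number[]> {
  const response = await fetchImpl("https://api.openai.com/v1/embeddings", {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      Authorization: "Bearer " + apiKey,
    },
    body: JSON.stringify({ model: "text-embedding-ada-002", input: [text] }),
  });
  // Surface HTTP errors (bad key, rate limit, ...) instead of a confusing
  // "cannot read properties of undefined" later on.
  if (!response.ok) {
    throw new Error(
      "Embedding request failed with HTTP status " + response.status
    );
  }
  const json = (await response.json()) as {
    data?: { embedding?: number[] }[];
  };
  const embedding = json.data?.[0]?.embedding;
  if (!embedding) {
    throw new Error("Embedding response did not contain data[0].embedding");
  }
  return embedding;
}
```

In an environment with a global `fetch`, that function should be usable as the `fetchImpl` argument; in tests, a small fake that returns a canned response works just as well.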

For efficiency, I’d recommend fetching multiple embeddings at once in a batch.


    export async function fetchEmbeddingBatch(text: string[]) {
      const result = await fetch("https://api.openai.com/v1/embeddings", {
        method: "POST",
        headers: {
          "Content-Type": "application/json",
          Authorization: "Bearer " + process.env.OPENAI_API_KEY,
        },
        body: JSON.stringify({
          model: "text-embedding-ada-002",
          // `text` is already an array of strings, so pass it through as-is.
          input: text,
        }),
      });
      const jsonresults = await result.json();
      const allembeddings = jsonresults.data as {
        embedding: number[];
        index: number;
      }[];
      // Sort ascending by index so the results line up with the input order.
      allembeddings.sort((a, b) => a.index - b.index);
      return allembeddings.map(({ embedding }) => embedding);
    }
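
If you have more strings than you want to send in a single request, one option is to split them into batches first. The following is a minimal sketch under my own assumptions: `toBatches` and `embedAll` are hypothetical helpers, `embedBatch` stands in for a function such as `fetchEmbeddingBatch`, and the default batch size of 100 is an arbitrary illustration rather than a documented limit.

```typescript
// Split a list into consecutive batches of at most `batchSize` items.
function toBatches<T>(items: T[], batchSize: number): T[][] {
  if (!Number.isInteger(batchSize) || batchSize < 1) {
    throw new Error("batchSize must be a positive integer");
  }
  const batches: T[][] = [];
  for (let start = 0; start < items.length; start += batchSize) {
    batches.push(items.slice(start, start + batchSize));
  }
  return batches;
}

// Embed all texts one batch at a time, preserving the input order.
// `embedBatch` is a stand-in for a function such as fetchEmbeddingBatch.
async function embedAll(
  texts: string[],
  embedBatch: (batch: string[]) => Promise<number[][]>,
  batchSize = 100 // arbitrary illustrative default, not a documented limit
): Promise<number[][]> {
  const embeddings: number[][] = [];
  for (const batch of toBatches(texts, batchSize)) {
    embeddings.push(...(await embedBatch(batch)));
  }
  return embeddings;
}
```

Processing batches sequentially keeps the sketch simple; running them concurrently would be faster but makes rate limits easier to hit.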

Where should I store it?

Once you have an embedding vector, you’ll likely want to do one of two things with it:

- Use it to search for similar strings (i.e. search for similar embeddings).

- Store it to be searched against in the future.

If you plan to store thousands of vectors, I’d recommend using a dedicated vector database like Pinecone. This allows you to quickly find nearby vectors for a given input, without having to compare against every vector every time. Stay tuned for a future post on using Pinecone alongside Convex.

If you don’t have many vectors, however, you can just store them directly in a normal database. In my case, if I want to suggest Stack posts similar to a given post or search, I only need to compare against fewer than 100 vectors, so I can just fetch them all and compare them in a matter of milliseconds using the Convex database.


How should I store an embedding?

If you’re storing your embeddings in Pinecone, stay tuned for a dedicated post on it, but the short answer is you configure a Pinecone “Index” and store some metadata along with the vector, so when you get results from Pinecone you can easily re-associate them with your application data. For instance, you can store the document ID for a row that you want to associate with the vector.


If you’re storing the embedding in Convex, I’d advise storing it as a binary blob rather than a JavaScript array of numbers, because Convex advises against storing arrays longer than 1024 elements and an embedding has 1,536. We can achieve this by converting it into a Float32Array pretty easily in JavaScript:

    const numberList = await fetchEmbedding(inputText); // number[]
    const floatArray = Float32Array.from(numberList); // Float32Array
    const floatBytes = floatArray.buffer; // ArrayBuffer
    // Save floatBytes to the DB

    // Later, after you read the bytes back out:
    const arrayAgain = new Float32Array(bytesFromDB); // Float32Array
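
To see what the conversion costs, here is a small self-contained sketch of the round trip on a toy vector (the variable names are mine). JavaScript numbers are 64-bit floats, so converting to 32-bit floats halves the storage at the price of a little precision.

```typescript
// A toy embedding; a real ada-002 embedding would have 1,536 elements.
const original: number[] = [0.1, -0.25, 0.5];

// number[] (64-bit floats) -> Float32Array (32-bit floats) -> raw bytes.
const asFloat32 = Float32Array.from(original);
const bytes = asFloat32.buffer;

// Each element takes 4 bytes, so 1,536 elements would take 6,144 bytes.
console.log(bytes.byteLength); // 12 for this 3-element toy vector

// Reading the bytes back yields the same 32-bit values.
const restored = new Float32Array(bytes);

// 32-bit floats keep roughly 7 significant decimal digits, so values that
// are not exactly representable (like 0.1) come back slightly rounded.
const maxError = Math.max(
  ...original.map((value, i) => Math.abs(value - restored[i]))
);
console.log(maxError < 1e-7); // true
```

One caveat: `floatArray.buffer` covers the whole underlying buffer, so this pattern assumes the Float32Array was created fresh (as with `Float32Array.from`) rather than as a view onto part of a larger buffer.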

You can represent the embedding as a field in a table in your schema:


    vectors: defineTable({
      float32Buffer: v.bytes(),
      textId: v.id("texts"),
    }),

In this case, I store the vector alongside an ID of a document in the “texts” table.


How to compare embeddings in JavaScript

If you’re looking to compare two embeddings from OpenAI without using a vector database, it’s very simple. There are a few ways of comparing vectors, including Euclidean distance, dot product, and cosine similarity. Thankfully, because OpenAI normalizes all the vectors to be length 1, they will all give the same rankings! With a simple dot product you can get a similarity score ranging from -1 (pointing in opposite directions) to 1 (the same). There are optimized libraries to do it, but for my purposes, this simple function suffices:

    /**
     * Compares two vectors by doing a dot product.
     *
     * Assuming both vectors are normalized to length 1, it will be in [-1, 1].
     * @returns [-1, 1] based on similarity. (1 is the same, -1 is the opposite)
     */
    export function compare(vectorA: Float32Array, vectorB: Float32Array) {
      return vectorA.reduce((sum, val, idx) => sum + val * vectorB[idx], 0);
    }
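
The claim that the three measures rank unit-length vectors identically follows from a short identity: |a - b|² = |a|² + |b|² - 2(a · b), which reduces to 2 - 2(a · b) when both lengths are 1. So a larger dot product always means a smaller Euclidean distance, and cosine similarity is just the dot product divided by lengths that are both 1. The sketch below checks this numerically on two toy unit vectors; the helper names are mine.

```typescript
// Dot product of two equal-length vectors.
function dot(a: number[], b: number[]): number {
  return a.reduce((sum, value, i) => sum + value * b[i], 0);
}

// Cosine similarity: the dot product divided by both magnitudes.
// For unit-length vectors the divisor is 1, so it equals the dot product.
function cosineSimilarity(a: number[], b: number[]): number {
  return dot(a, b) / (Math.sqrt(dot(a, a)) * Math.sqrt(dot(b, b)));
}

// Euclidean distance between two equal-length vectors.
function euclideanDistance(a: number[], b: number[]): number {
  return Math.sqrt(a.reduce((sum, value, i) => sum + (value - b[i]) ** 2, 0));
}

// Two toy unit vectors (0.6² + 0.8² = 1).
const unitA = [0.6, 0.8];
const unitB = [0.8, 0.6];

const similarity = dot(unitA, unitB);
const distanceSquared = euclideanDistance(unitA, unitB) ** 2;

// All of these agree up to floating-point rounding:
// similarity ≈ cosineSimilarity, and distanceSquared ≈ 2 - 2 * similarity.
console.log(similarity, cosineSimilarity(unitA, unitB));
console.log(distanceSquared, 2 - 2 * similarity);
```

This equivalence only holds for normalized vectors; with embeddings of varying length, the three measures can rank results differently.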

Example

In this example, let’s make a function (a Convex query in this case) that, given a query vector, returns all of the other vectors ordered by their similarity scores. It assumes the table of vectors we defined above and the compare function we just defined.

    export const compareTo = query(async ({ db }, { vectorId }) => {
      // Load the query vector and decode its bytes back into floats.
      const target = await db.get(vectorId);
      if (target === null) {
        throw new Error("Vector not found");
      }
      const targetArray = new Float32Array(target.float32Buffer);
      // Fetch every stored vector; this is fine for a small table.
      const vectors = await db.query("vectors").collect();
      const scores = await Promise.all(
        vectors
          // Skip the query vector itself.
          .filter((vector) => !vector._id.equals(vectorId))
          .map(async (vector) => {
            const score = compare(
              targetArray,
              new Float32Array(vector.float32Buffer)
            );
            return { score, textId: vector.textId, vectorId: vector._id };
          })
      );
      // Highest similarity first.
      return scores.sort((a, b) => b.score - a.score);
    });
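
The same brute-force ranking can be tried outside of Convex. Below is a minimal in-memory sketch under my own assumptions: the `Candidate` and `Scored` types, the `topMatches` helper, and the two-element toy vectors are all hypothetical and only illustrate the idea of scoring every candidate and keeping the best few.

```typescript
type Candidate = { id: string; vector: Float32Array };
type Scored = { id: string; score: number };

// Dot product of two equal-length vectors.
function dotProduct(a: Float32Array, b: Float32Array): number {
  if (a.length !== b.length) {
    throw new Error("Vectors must have the same length");
  }
  let sum = 0;
  for (let i = 0; i < a.length; i++) {
    sum += a[i] * b[i];
  }
  return sum;
}

// Score every candidate against the query and return the `limit` best,
// highest similarity first. This compares against all vectors, so it only
// suits small collections.
function topMatches(
  query: Float32Array,
  candidates: Candidate[],
  limit: number
): Scored[] {
  return candidates
    .map(({ id, vector }) => ({ id, score: dotProduct(query, vector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, limit);
}

// Toy unit vectors standing in for real 1,536-element embeddings.
const candidates: Candidate[] = [
  { id: "a", vector: Float32Array.from([1, 0]) },
  { id: "b", vector: Float32Array.from([0, 1]) },
  { id: "c", vector: Float32Array.from([0.8, 0.6]) },
];

// Ranks "c" first (score near 0.96), then "b" (score near 0.8).
console.log(topMatches(Float32Array.from([0.6, 0.8]), candidates, 2));
```

For thousands of vectors or more, this linear scan is where a dedicated vector database starts to pay off.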

Summary

In this post, we looked at embeddings, why they’re useful, and how we can store and use them in Convex. I’ll be making more posts on working with embeddings, including chunking long input into multiple embeddings and using Pinecone alongside Convex soon. Let us know in our Discord what you think!


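Author of this post: Maeiee

Copyright notice: Unless otherwise stated, this post is an original article and its copyright belongs to Maeiee. Reproduction without permission is prohibited!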
